Language Model Tool API

Language model tools enable you to extend the functionality of a large language model (LLM) in chat with domain-specific capabilities. To process a user's chat prompt, agents in VS Code can automatically invoke these tools to perform specialized tasks as part of the conversation.

By contributing a language model tool in your VS Code extension, you can extend the agentic coding workflow while also providing deep integration with the editor. Extension tools are one of three types of tools available in VS Code, alongside built-in tools and MCP tools.

In this extension guide, you learn how to create a language model tool by using the Language Model Tools API and how to implement tool calling in a chat extension.

You can also extend the chat experience with specialized tools by contributing an MCP server. See the AI Extensibility Overview for details on the different options and how to decide which approach to use.

For information about using tools as an end user, see Use tools in chat.

What is tool calling in an LLM?

A language model tool is a function that can be invoked as part of a language model request. For example, you might have a function that retrieves information from a database, performs some calculation, or calls an online API. When you contribute a tool in a VS Code extension, agent mode can then invoke the tool based on the context of the conversation.

The LLM never actually executes the tool itself, instead the LLM generates the parameters that are used to call your tool. It's important to clearly describe the tool's purpose, functionality, and input parameters so that the tool can be invoked in the right context.

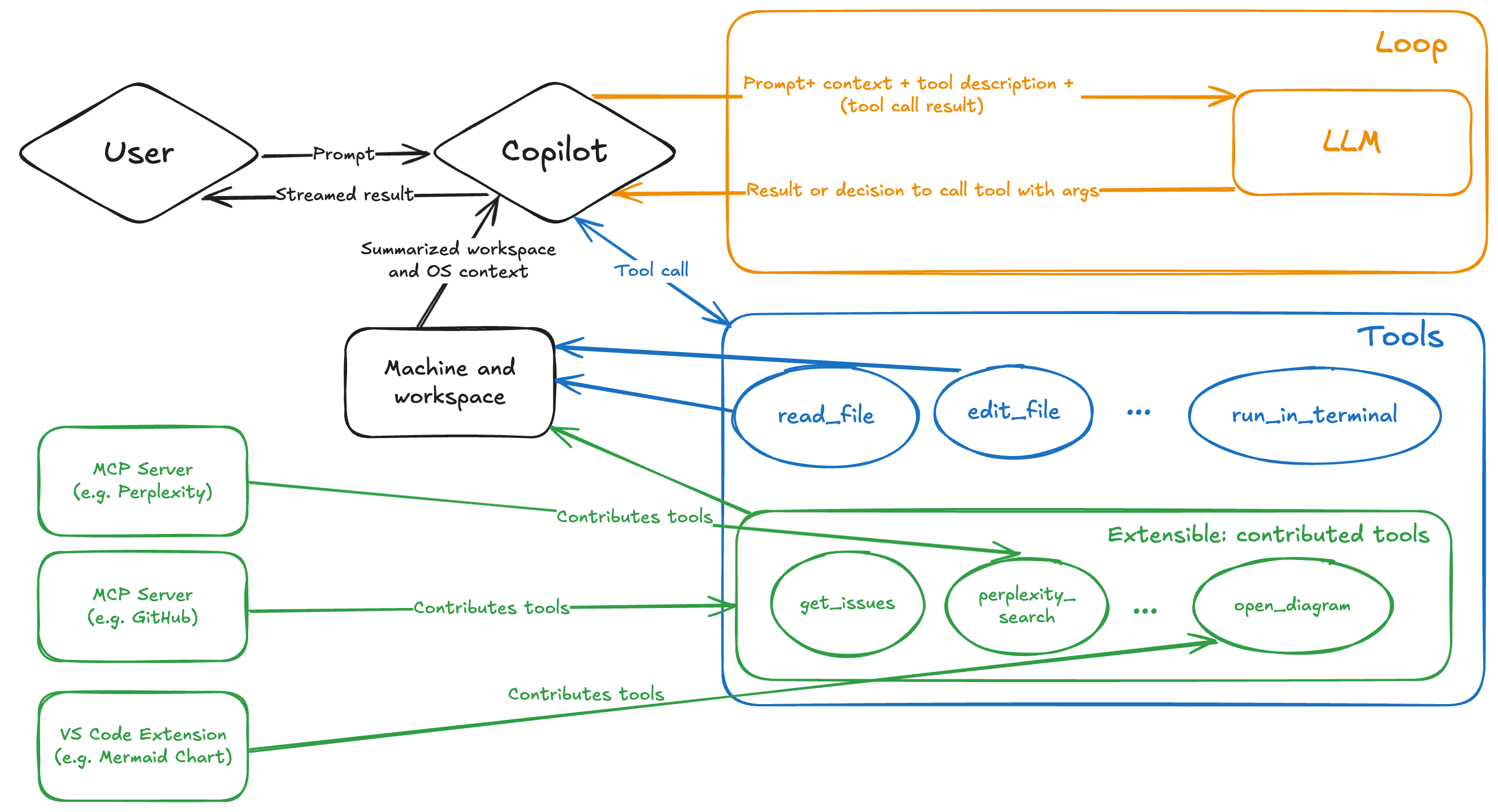

The following diagram shows the tool-calling flow in agent mode in VS Code. See Tool-calling flow for details about the specific steps involved.

Read more about function calling in the OpenAI documentation.

Why implement a language model tool in your extension?

Implementing a language model tool in your extension has several benefits:

- Extend agent mode with specialized, domain-specific tools that are automatically invoked as part of responding to a user prompt. For example, enable database scaffolding and querying to dynamically provide the LLM with relevant context.

- Deeply integrate with VS Code by using the broad set of extension APIs. For example, use the debug APIs to get the current debugging context and use it as part of the tool's functionality.

- Distribute and deploy tools via the Visual Studio Marketplace, providing a reliable and seamless experience for users. Users don't need a separate installation and update process for your tool.

You might consider implementing a language model tool with an MCP server in the following scenarios:

- You already have an MCP server implementation and also want to use it in VS Code.

- You want to reuse the same tool across different development environments and platforms.

- Your tool is hosted remotely as a service.

- You don't need access to VS Code APIs.

Learn more about the differences between tool types.

Create a language model tool

Implementing a language model tool consists of two main parts:

- Define the tool's configuration in the

package.jsonfile of your extension. - Implement the tool in your extension code by using the Language Model API reference

You can get started with a basic example project.

1. Static configuration in package.json

The first step to define a language model tool in your extension is to define it in the package.json file of your extension. This configuration includes the tool name, description, input schema, and other metadata:

-

Add an entry for your tool in the

contributes.languageModelToolssection of your extension'spackage.jsonfile. -

Give the tool a unique name:

Property Description nameThe unique name of the tool, used to reference the tool in the extension implementation code. Format the name in the format {verb}_{noun}. See naming guidelines.displayNameThe user-friendly name of the tool, used for displaying in the UI. -

If the tool can be used with agents or referenced in a chat prompt with

#, add the following properties:Users can enable or disable the tool in the Chat view, similar to how this is done for Model Context Protocol (MCP) tools.

Property Description canBeReferencedInPromptSet to trueif the tool can be used with agents or referenced in chat.toolReferenceNameThe name for users to reference the tool in a chat prompt via #.iconThe icon to display for the tool in the UI. userDescriptionUser-friendly description of the tool, used for displaying in the UI. -

Add a detailed description in

modelDescription. This information is used by the LLM to determine in which context your tool should be used.- What exactly does the tool do?

- What kind of information does it return?

- When should and shouldn't it be used?

- Describe important limitations or constraints of the tool.

-

If the tool takes input parameters, add an

inputSchemaproperty that describes the tool's input parameters.This JSON schema describes an object with the properties that the tool takes as input, and whether they are required. File paths should be absolute paths.

Describe what each parameter does and how it relates to the tool's functionality.

-

Add a

whenclause to control when the tool is available.The

languageModelToolscontribution point lets you restrict when a tool is available for agent mode or can be referenced in a prompt by using a when clause. For example, a tool that gets the debug call stack information, should only be available when the user is debugging."contributes": { "languageModelTools": [ { "name": "chat-tools-sample_tabCount", ... "when": "debugState == 'running'" } ] }

Example tool definition

The following example shows how to define a tool that counts the number of active tabs in a tab group.

"contributes": {

"languageModelTools": [

{

"name": "chat-tools-sample_tabCount",

"tags": [

"editors",

"chat-tools-sample"

],

"toolReferenceName": "tabCount",

"displayName": "Tab Count",

"modelDescription": "The number of active tabs in a tab group in VS Code.",

"userDescription": "Count the number of active tabs in a tab group.",

"canBeReferencedInPrompt": true,

"icon": "$(files)",

"inputSchema": {

"type": "object",

"properties": {

"tabGroup": {

"type": "number",

"description": "The index of the tab group to check. This is optional- if not specified, the active tab group will be checked.",

"default": 0

}

}

}

}

]

}

2. Tool implementation

Implement the language model tool by using the Language Model API. This consists of the following steps:

-

On activation of the extension, register the tool with

vscode.lm.registerTool.Provide the name of the tool as you specified it in the

nameproperty inpackage.json.If you want the tool to be private to your extension, skip the tool registration step.

export function registerChatTools(context: vscode.ExtensionContext) { context.subscriptions.push( vscode.lm.registerTool('chat-tools-sample_tabCount', new TabCountTool()) ); } -

Create a class that implements the

vscode.LanguageModelTool<>interface. -

Add tool confirmation messages in the

prepareInvocationmethod.A generic confirmation dialog will always be shown for tools from extensions, but the tool can customize the confirmation message. Give enough context to the user to understand what the tool is doing. The message can be a

MarkdownStringcontaining a code block.The following example shows how to provide a confirmation message for the tab count tool.

async prepareInvocation( options: vscode.LanguageModelToolInvocationPrepareOptions<ITabCountParameters>, _token: vscode.CancellationToken ) { const confirmationMessages = { title: 'Count the number of open tabs', message: new vscode.MarkdownString( `Count the number of open tabs?` + (options.input.tabGroup !== undefined ? ` in tab group ${options.input.tabGroup}` : '') ), }; return { invocationMessage: 'Counting the number of tabs', confirmationMessages, }; }If

prepareInvocationreturnedundefined, the generic confirmation message will be shown. Note that the user can also select to "Always Allow" a certain tool. -

Define an interface that describes the tool input parameters.

The interface is used in the

invokemethod of thevscode.LanguageModelToolclass. The input parameters are validated against the JSON schema you defined in theinputSchemainpackage.json.The following example shows the interface for the tab count tool.

export interface ITabCountParameters { tabGroup?: number; } -

Implement the

invokemethod. This method is called when the language model tool is invoked while processing a chat prompt.The

invokemethod receives the tool input parameters in theoptionsparameter. The parameters are validated against the JSON schema defined ininputSchemainpackage.json.When an error occurs, throw an error with a message that makes sense to the LLM. Optionally, provide instructions on what the LLM should do next, such as retrying with different parameters, or performing a different action.

The following example shows the implementation of the tab count tool. The result of the tool is an instance of type

vscode.LanguageModelToolResult.async invoke( options: vscode.LanguageModelToolInvocationOptions<ITabCountParameters>, _token: vscode.CancellationToken ) { const params = options.input; if (typeof params.tabGroup === 'number') { const group = vscode.window.tabGroups.all[Math.max(params.tabGroup - 1, 0)]; const nth = params.tabGroup === 1 ? '1st' : params.tabGroup === 2 ? '2nd' : params.tabGroup === 3 ? '3rd' : `${params.tabGroup}th`; return new vscode.LanguageModelToolResult([new vscode.LanguageModelTextPart(`There are ${group.tabs.length} tabs open in the ${nth} tab group.`)]); } else { const group = vscode.window.tabGroups.activeTabGroup; return new vscode.LanguageModelToolResult([new vscode.LanguageModelTextPart(`There are ${group.tabs.length} tabs open.`)]); } }

View the full source code for implementing a language model tool in the VS Code Extension Samples repository.

Tool-calling flow

When a user sends a chat prompt, the following steps occur:

-

Copilot determines the list of available tools based on the user's configuration.

The list of tools consists of built-in tools, tools registered by extensions, and tools from MCP servers. You can contribute to agent mode via extensions or MCP servers (shown in green in the diagram).

-

Copilot sends the request to the LLM and provides it with the prompt, chat context, and the list of tool definitions to consider.

The LLM generates a response, which might include one or more requests to invoke a tool.

-

If needed, Copilot invokes the suggested tool(s) with the parameter values provided by the LLM.

A tool response might result in more requests for tool invocations.

-

If there are errors or follow-up tool requests, Copilot iterates over the tool-calling flow until all tool requests are resolved.

-

Copilot returns the final response to the user, which might include responses from multiple tools.

Guidelines and conventions

-

Naming: write clear and descriptive names for tools and parameters.

-

Tool name: should be unique, and clearly describe their intent. Structure the tool name in the format

{verb}_{noun}. For example,get_weather,get_azure_deployment, orget_terminal_output. -

Parameter name: should describe the parameter's purpose. Structure the parameter name in the format

{noun}. For example,destination_location,ticker, orfile_name.

-

-

Descriptions: write detailed descriptions for tools and parameters.

- Describe what the tool does and when it should and shouldn't be used. For example, "This tool retrieves the weather for a given location."

- Describe what each parameter does and how it relates to the tool's functionality. For example, "The

destination_locationparameter specifies the location for which to retrieve the weather. It should be a valid location name or coordinates." - Describe important limitations or constraints of the tool. For example, "This tool only retrieves weather data for locations in the United States. It might not work for other regions."

-

User confirmation: provide a confirmation message for the tool invocation. A generic confirmation dialog will always be shown for tools from extensions, but the tool can customize the confirmation message. Give enough context to the user to understand what the tool is doing.

-

Error handling: when an error occurs, throw an error with a message that makes sense to the LLM. Optionally, provide instructions on what the LLM should do next, such as retrying with different parameters, or performing a different action.

Get more best practices for creating tools in the OpenAI documentation and Anthropic documentation.