AI language models in VS Code

Visual Studio Code offers different built-in language models that are optimized for different tasks. You can also bring your own language model API key to use models from other providers. This article describes how to change the language model for chat or inline suggestions, and how to use your own API key.

Choose the right model for your task

By default, chat uses a base model to provide fast, capable responses for a wide range of tasks, such as coding, summarization, knowledge-based questions, reasoning, and more.

However, you are not limited to using only this model. You can choose from a selection of language models, each with its own particular strengths. For a detailed comparison of AI models, see Choosing the right AI model for your task in the GitHub Copilot documentation.

Depending on the agent you are using, the list of available models might be different. For example, in agent mode, the list of models is limited to those that have good support for tool calling.

If you are a Copilot Business or Enterprise user, your administrator needs to enable certain models for your organization by opting in to Editor Preview Features in the Copilot policy settings on GitHub.com.

Change the model for chat conversations

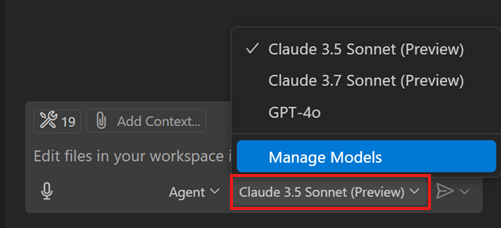

Use the language model picker in the chat input field to change the model that is used for chat conversations and code editing.

Install the AI Toolkit extension to add more language models to enhance GitHub Copilot capabilities.

For more information, see Change the chat model.

You can further extend the list of available models by using your own language model API key.

If you have a paid Copilot plan, the model picker shows the premium request multiplier for premium models. Learn more about premium requests in the GitHub Copilot documentation.

Auto model selection

Auto model selection is available as of VS Code release 1.104.

With auto model selection, VS Code automatically selects a model to give you optimal performance and to reduce the rate limiting that results from excessive usage of particular language models. It detects degraded model performance and uses the best model at that point in time. We continue to improve this feature to pick the most suitable model for your needs.

To use auto model selection, select Auto from the model picker in chat.

Currently, auto chooses between Claude Sonnet 4, GPT-5, GPT-5 mini and other models. If your organization has opted out of certain models, auto will not select those models. If none of these models are available or you run out of premium requests, auto will fall back to a model at 0x multiplier.
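The fallback rule described above can be pictured with a small TypeScript sketch. It is illustrative only: the ModelInfo shape, the pickAutoModel function, and the example multiplier values are hypothetical and are not VS Code's implementation, which also takes model health and rate limits into account.

```typescript
// Hypothetical shape for illustration; not a VS Code API.
interface ModelInfo {
  name: string;
  multiplier: number;    // premium request multiplier (0 means no premium requests are consumed)
  enabledByOrg: boolean; // false if the organization has opted out of this model
}

// Assumed rule: prefer an allowed premium model while premium requests remain,
// otherwise fall back to an allowed model at 0x multiplier.
function pickAutoModel(
  candidates: ModelInfo[],
  premiumRequestsLeft: number
): ModelInfo | undefined {
  const allowed = candidates.filter(m => m.enabledByOrg);
  if (premiumRequestsLeft > 0) {
    const premium = allowed.find(m => m.multiplier > 0);
    if (premium) {
      return premium;
    }
  }
  return allowed.find(m => m.multiplier === 0);
}

// Example data with made-up names and multipliers.
const exampleModels: ModelInfo[] = [
  { name: "premium-a", multiplier: 1, enabledByOrg: false },
  { name: "premium-b", multiplier: 1, enabledByOrg: true },
  { name: "base", multiplier: 0, enabledByOrg: true },
];
```

With this example data, a user with premium requests remaining would get premium-b (premium-a is skipped because the organization opted out), and a user with none remaining would get base.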

Multiplier discounts

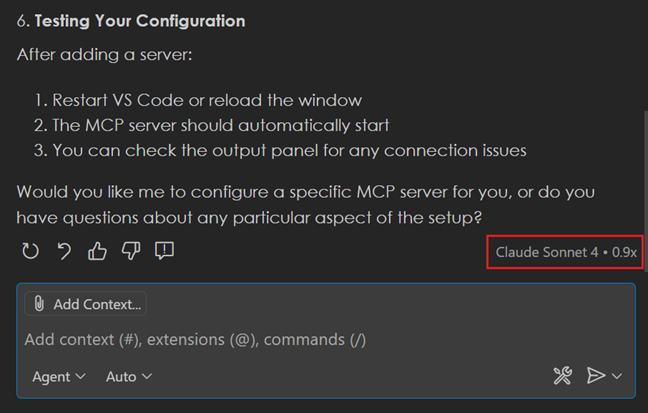

When using auto model selection, VS Code uses a variable model multiplier, based on the selected model. If you are a paid user, auto will apply a request discount.

At any time, you can see which model and model multiplier are used by hovering over the chat response.

Manage language models

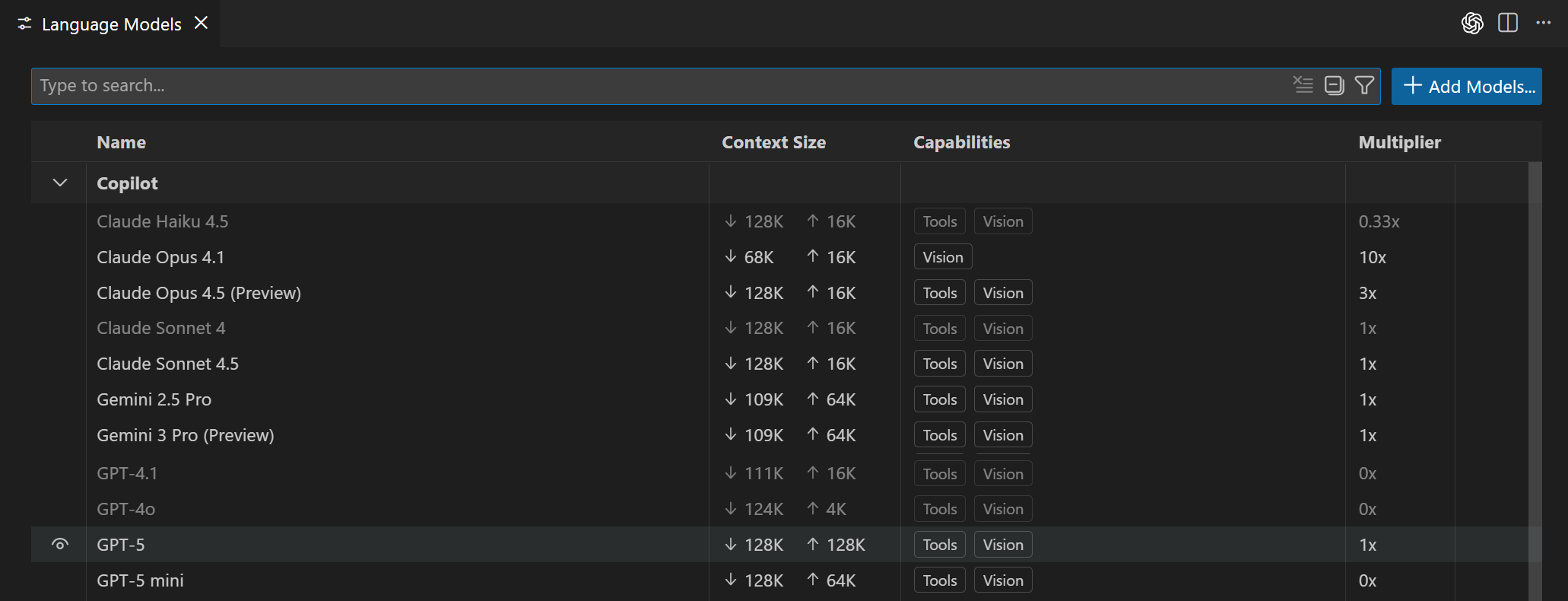

You can use the Language Models editor to view all available models, choose which models are shown in the model picker, and add more models from built-in providers or from extension-provided model providers.

To open the Language Models editor, open the model picker in the Chat view and select Manage Models or run the Chat: Manage Language Models command from the Command Palette.

The editor lists all models available to you, showing key information such as the model capabilities, context size, billing details, and visibility status. By default, models are grouped by provider, but you can also group them by visibility.

You can search and filter models by using the following options:

- Text search with the search box

- Provider: @provider:"OpenAI"

- Capability: @capability:tools, @capability:vision, @capability:agent

- Visibility: @visible:true/false
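To make the filter syntax concrete, here is a minimal TypeScript sketch of how such a query could be parsed and applied to a list of models. The ModelEntry and ParsedQuery shapes and both functions are hypothetical, and the sketch assumes that multiple capability filters must all match. It is not how the Language Models editor is implemented.

```typescript
// Hypothetical model entry; the real editor's data model is not documented here.
interface ModelEntry {
  name: string;
  provider: string;
  capabilities: string[];
  visible: boolean;
}

interface ParsedQuery {
  text: string;           // remaining free-text search, lowercased
  provider?: string;
  capabilities: string[];
  visible?: boolean;
}

// Split a query into @key:value filters and the remaining free text.
function parseQuery(query: string): ParsedQuery {
  const parsed: ParsedQuery = { text: "", capabilities: [] };
  // Matches @key:"quoted value" or @key:bareValue.
  const filterPattern = /@(\w+):(?:"([^"]*)"|(\S+))/g;
  const rest = query.replace(
    filterPattern,
    (_match: string, key: string, quoted?: string, bare?: string) => {
      const value = quoted ?? bare ?? "";
      if (key === "provider") {
        parsed.provider = value;
      } else if (key === "capability") {
        parsed.capabilities.push(value);
      } else if (key === "visible") {
        parsed.visible = value === "true";
      }
      return "";
    }
  );
  parsed.text = rest.replace(/\s+/g, " ").trim().toLowerCase();
  return parsed;
}

// Assumption: all filters must match, and free text matches the model name.
function matches(model: ModelEntry, q: ParsedQuery): boolean {
  if (q.provider !== undefined && model.provider.toLowerCase() !== q.provider.toLowerCase()) {
    return false;
  }
  if (q.visible !== undefined && model.visible !== q.visible) {
    return false;
  }
  if (!q.capabilities.every(c => model.capabilities.includes(c))) {
    return false;
  }
  return model.name.toLowerCase().includes(q.text);
}
```

For example, parseQuery('@provider:"OpenAI" @capability:tools mini') yields a provider filter, one capability filter, and the free text "mini", which matches can then check against each entry.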

Customize the model picker

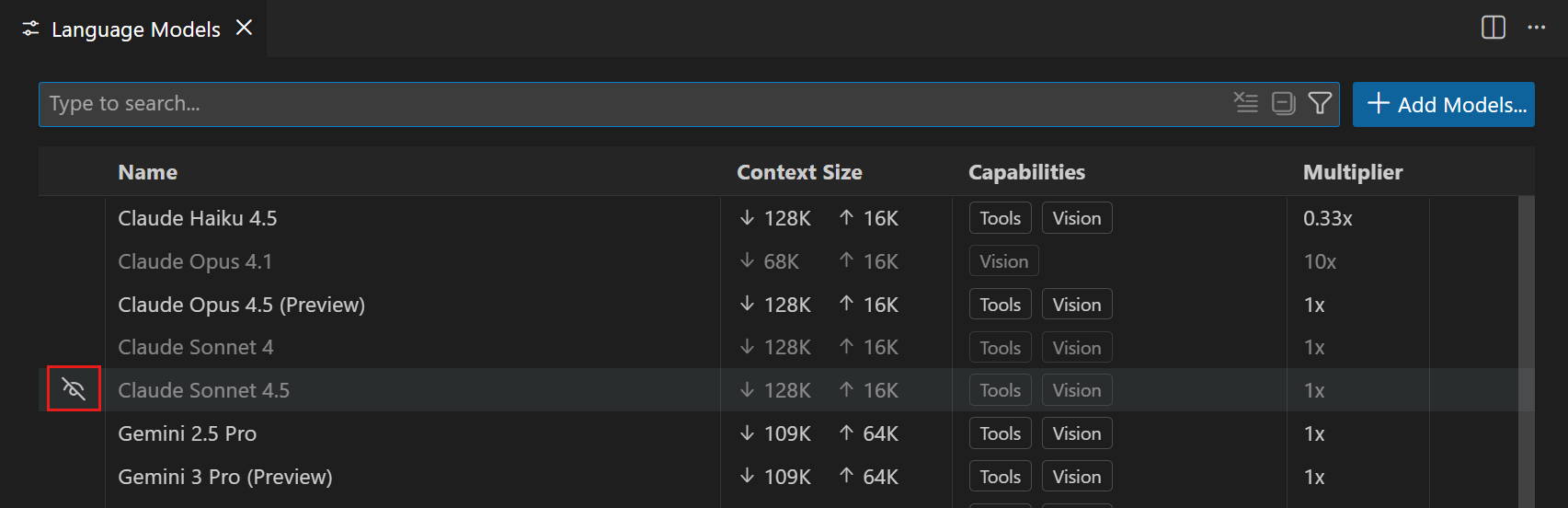

You can customize which models are shown in the model picker by changing the visibility status of models in the Language Models editor. You can show or hide models from any provider.

Hover over a model in the list and select the eye icon to show or hide the model in the model picker.

Bring your own language model key

This feature is not currently available to Copilot Business or Copilot Enterprise users.

GitHub Copilot in VS Code comes with a variety of built-in language models that are optimized for different tasks. If you want to use a model that is not available as a built-in model, you can bring your own language model API key (BYOK) to use models from other providers.

Using your own language model API key in VS Code has several benefits:

- Model choice: access hundreds of models from different providers, beyond the built-in models.

- Experimentation: experiment with new models or features that are not yet available in the built-in models.

- Local compute: use your own compute for one of the models already supported in GitHub Copilot or to run models not yet available.

- Greater control: by using your own key, you can bypass the standard rate limits and restrictions imposed on the built-in models.

VS Code provides different options to add more models:

- Use one of the built-in model providers

- Install a language model provider extension from the Visual Studio Marketplace, for example, AI Toolkit for VS Code with Foundry Local

Considerations when using bring your own model key

- Only applies to the chat experience and doesn't affect inline suggestions or other AI-powered features in VS Code.

- Capabilities are model-dependent and might differ from the built-in models, for example, support for tool calling, vision, or thinking.

- The Copilot service API is still used for some tasks, such as sending embeddings, repository indexing, query refinement, intent detection, and side queries.

- There is no guarantee that responsible AI filtering is applied to the model's output when using BYOK.

Add a model from a built-in provider

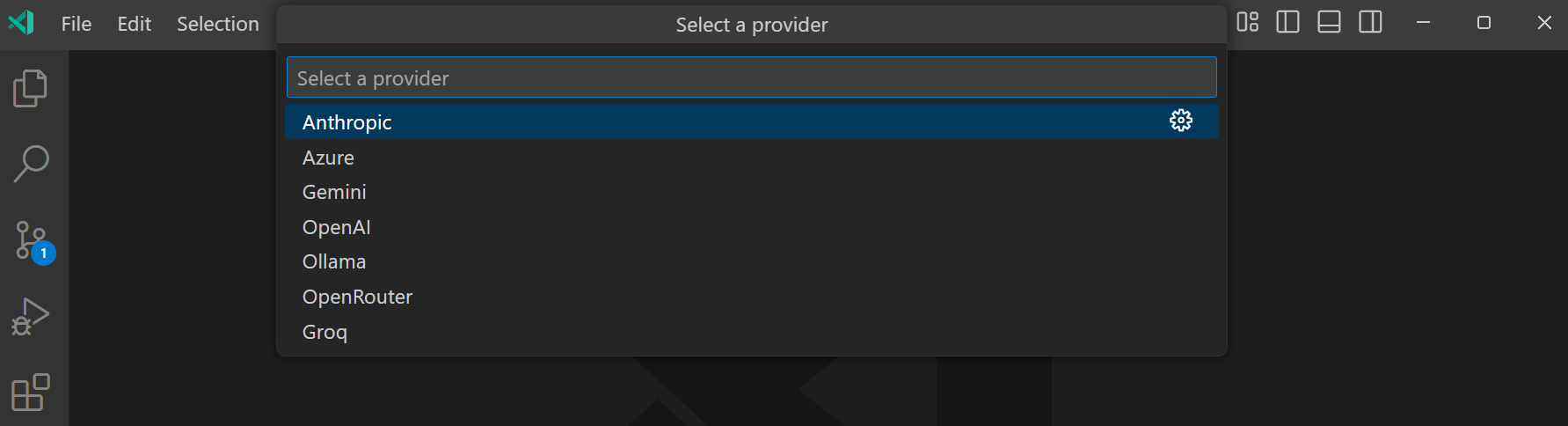

VS Code supports several built-in model providers that you can use to add more models to the model picker in chat.

To configure a language model from a built-in provider:

-

Select Manage Models from the language model picker in the Chat view or run the Chat: Manage Language Models command from the Command Palette.

-

In the Language Models editor, select Add Models, and then select a model provider from the list.

-

Enter the provider-specific details, such as the API key or endpoint URL.

-

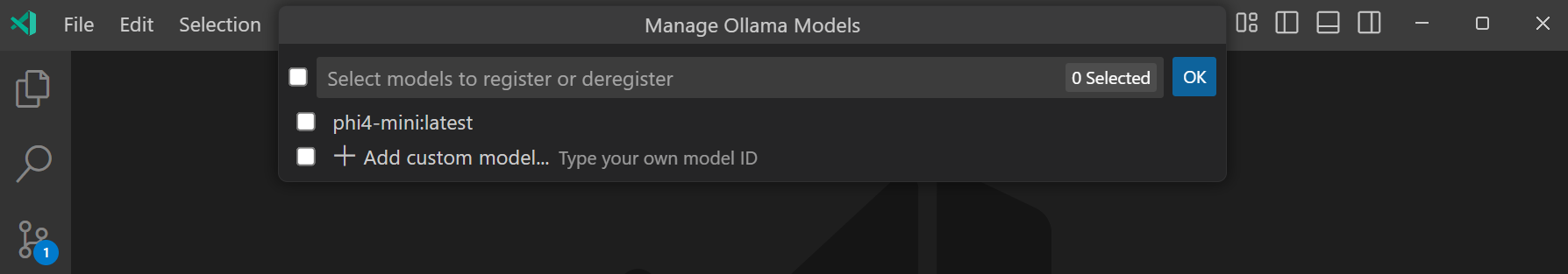

Depending on the provider, enter the model details or select a model from the list.

The following screenshot shows the model picker for Ollama running locally, with the Phi-4 model deployed.

-

You can now select the model from the model picker in chat.
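If you are adding a locally running Ollama model, it can help to first confirm that the server is reachable and that the model is present. The following TypeScript sketch assumes Ollama's default local address, http://localhost:11434, and its /api/tags route for listing local models. The listLocalModels and hasModel helpers and the FetchLike type are hypothetical names introduced for illustration.

```typescript
// Relevant part of the response from Ollama's GET /api/tags route.
interface OllamaTagsResponse {
  models: { name: string }[];
}

// Minimal fetch-like signature so the sketch does not depend on DOM or Node.js typings.
type FetchLike = (url: string) => Promise<{ ok: boolean; json(): Promise<unknown> }>;

// Return the names of the models that the local Ollama server reports.
// Assumption: the server listens on the default address unless baseUrl is given.
async function listLocalModels(
  fetchFn: FetchLike,
  baseUrl = "http://localhost:11434"
): Promise<string[]> {
  const response = await fetchFn(`${baseUrl}/api/tags`);
  if (!response.ok) {
    throw new Error(`Ollama endpoint at ${baseUrl} returned an error`);
  }
  const body = (await response.json()) as OllamaTagsResponse;
  return body.models.map(m => m.name);
}

// True if a model with the given base name (ignoring the ":tag" suffix) is present.
function hasModel(names: string[], baseName: string): boolean {
  return names.some(n => n.split(":")[0] === baseName);
}
```

In Node.js 18 or later, you could pass the global fetch function as fetchFn and then check for the model with hasModel(names, "phi4"), assuming that phi4 is the name under which the Phi-4 model was pulled.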

For a model to be available when using agents, it must support tool calling. If the model doesn't support tool calling, it won't be shown in the model picker.

Configuring a custom OpenAI-compatible model is currently only available in VS Code Insiders as of release 1.104. You can also manually add your OpenAI-compatible model configuration in the

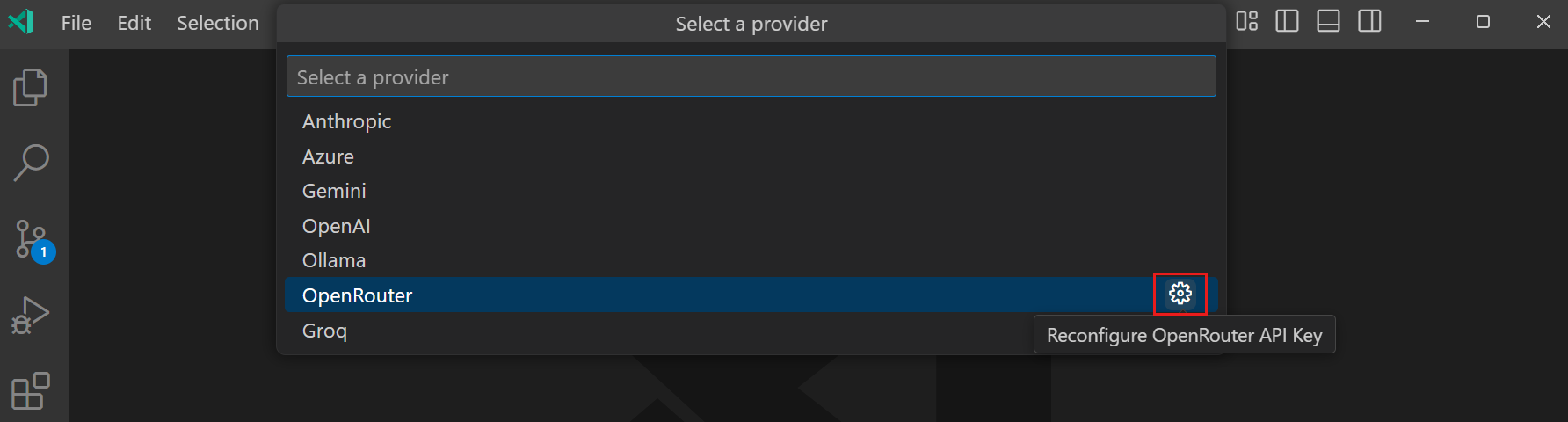

Update model provider details

To update the details of a model provider you have configured previously:

- Select Manage Models from the language model picker in the Chat view or run the Chat: Manage Language Models command from the Command Palette.

- In the Language Models editor, select the gear icon for the model provider you want to update.

- Update the provider details, such as the API key or endpoint URL.

Change the model for inline chat

You can configure a default language model for editor inline chat. This enables you to use a different model for inline chat than for chat conversations.

To configure the default model for inline chat, use the

If you change the model during an inline chat session, the selection persists for the remainder of the session. After you reload VS Code, the model resets to the value specified in the

Change the model for inline suggestions

To change the language model that is used for generating inline suggestions in the editor:

- Select Configure Inline Suggestions... from the Chat menu in the VS Code title bar.

- Select Change Completions Model..., and then select one of the models from the list.

The models that are available for inline suggestions might evolve over time as we add support for more models.

Frequently asked questions

Why is bring your own model key not available for Copilot Business or Copilot Enterprise?

Bringing your own model key is not available for Copilot Business or Copilot Enterprise because it's mainly meant to let users experiment with the newest models as soon as they are announced, before they are available as built-in models in Copilot.

Bringing your own model key will come to Copilot Business and Enterprise plans later this year, as we better understand the requirements that organizations have for using this functionality at scale. Copilot Business and Enterprise users can still use the built-in, managed models.

Can I use locally hosted models with Copilot in VS Code?

You can use locally hosted models in chat by using bring your own model key (BYOK) and using a model provider that supports connecting to a local model. You have different options to connect to a local model:

- Use a built-in model provider that supports local models

- Install an extension from the Visual Studio Marketplace, for example, AI Toolkit for VS Code with Foundry Local

Currently, you cannot connect to a local model for inline suggestions. However, VS Code provides the InlineCompletionItemProvider extension API, which enables extensions to contribute a custom completion provider. You can get started with our Inline Completions sample.
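As a rough idea of what such an extension could look like, the following TypeScript sketch builds a provider object whose provideInlineCompletionItems method forwards the current line prefix to a completion function. The createLocalCompletionProvider helper, the CompleteFn type, and the small stand-in interfaces are hypothetical. They mirror only the members used here so that the sketch stands on its own, and a real extension would use the types from the vscode module instead.

```typescript
// Stand-ins for the few members of the VS Code API used below (assumption:
// a real extension would use vscode.TextDocument and vscode.Position instead).
interface TextLineLike { text: string; }
interface DocumentLike { lineAt(line: number): TextLineLike; }
interface PositionLike { line: number; character: number; }
interface InlineItemLike { insertText: string; }

// Hypothetical hook: given the text before the cursor, return a suggestion.
// This is where a call to a locally hosted model would go.
type CompleteFn = (prefix: string) => Promise<string>;

function createLocalCompletionProvider(complete: CompleteFn) {
  return {
    async provideInlineCompletionItems(
      document: DocumentLike,
      position: PositionLike
    ): Promise<InlineItemLike[]> {
      // Use only the text on the current line up to the cursor as context.
      const prefix = document.lineAt(position.line).text.slice(0, position.character);
      if (prefix.trim().length === 0) {
        return []; // nothing to complete on an empty line
      }
      const insertText = await complete(prefix);
      return insertText ? [{ insertText }] : [];
    },
  };
}

// In an extension's activate() function, registration would look roughly like:
//   const provider = createLocalCompletionProvider(myCompleteFn);
//   vscode.languages.registerInlineCompletionItemProvider({ pattern: "**" }, provider);
```

Keeping the model call behind a plain function makes the provider easy to test without the extension host. The Inline Completions sample remains the authoritative starting point.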

Currently, using a locally hosted model still requires the Copilot service for some tasks. Therefore, your GitHub account needs to have access to a Copilot plan (for example, Copilot Free) and you need to be online. This requirement might change in a future release.

Can I use a local model without an internet connection?

Currently, using a local model requires access to the Copilot service and therefore requires you to be online. This requirement might change in a future release.

Can I use a local model without a Copilot plan?

No, currently you need to have access to a Copilot plan (for example, Copilot Free) to use a local model. This requirement might change in a future release.