AI language models in VS Code

Copilot in Visual Studio Code offers different built-in language models that are optimized for different tasks. You can also bring your own language model API key to use models from other providers. This article describes how to change the language model for chat or code completions, and how to use your own API key.

Choose the right model for your task

By default, chat uses a base model to provide fast, capable responses for a wide range of tasks, such as coding, summarization, knowledge-based questions, reasoning, and more.

However, you are not limited to this model. You can choose from a selection of language models, each with its own strengths. You might have a favorite model, or you might prefer a particular model for questions about a specific subject. For a detailed comparison of AI models, see Choosing the right AI model for your task in the GitHub Copilot documentation.

Depending on the chat mode you are using, the list of available models might be different. For example, in agent mode, the list of models is limited to those that have good support for tool calling.
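As a rough illustration of this filtering, the following Python sketch keeps only tool-calling-capable models when the mode is agent mode. The `ModelInfo` record, its fields, the mode strings, and the model names are all hypothetical. VS Code applies its own selection rules internally and does not expose a function like this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelInfo:
    # Hypothetical model record for illustration; not a VS Code or Copilot API.
    name: str
    supports_tool_calling: bool


def models_for_mode(models: list[ModelInfo], mode: str) -> list[str]:
    """Return the model names a picker might show for a chat mode (illustrative rule)."""
    if mode == "agent":
        # Agent mode depends on tool calling, so drop models without it.
        return [m.name for m in models if m.supports_tool_calling]
    # Other modes show every available model in this sketch.
    return [m.name for m in models]


if __name__ == "__main__":
    catalog = [ModelInfo("model-a", True), ModelInfo("model-b", False)]
    print(models_for_mode(catalog, "agent"))
    print(models_for_mode(catalog, "ask"))
```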

If you are a Copilot Business or Enterprise user, your administrator needs to enable certain models for your organization by opting in to Editor Preview Features in the Copilot policy settings on GitHub.com.

Why use your own language model API key?

In addition to the built-in models, you can access models directly from Anthropic, Azure, Google, Groq, OpenAI, OpenRouter, or Ollama by providing a valid API key. Learn how to use your own API key in VS Code.

Using your own language model API key in VS Code has several advantages:

- Model choice: access hundreds of models from different providers, beyond the built-in models.

- Experimentation: experiment with new models or features that are not yet available in the built-in models.

- Local compute: use your own compute to run one of the models already supported in GitHub Copilot, or to run models that are not yet available.

- Greater control: by using your own key, you can bypass the standard rate limits and restrictions imposed on the built-in models.

This feature is in preview and is not currently available to Copilot Business or Copilot Enterprise users.

Change the model for chat conversations

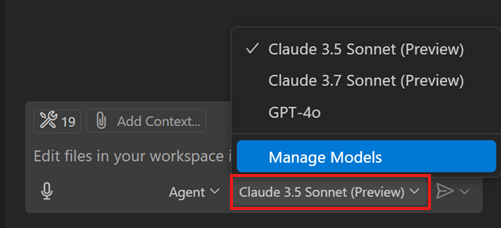

Use the language model picker in the chat input field to change the model that is used for chat conversations and code editing.

You can further extend the list of available models by using your own language model API key.

If you have a paid Copilot plan, the model picker shows the premium request multiplier for premium models. Learn more about premium requests in the GitHub Copilot documentation.
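The arithmetic behind the multiplier can be sketched as follows. The sketch assumes that each chat request to a model counts as that model's multiplier in premium requests. The multiplier values in the example are hypothetical, so read the real values from the model picker and check the GitHub Copilot documentation for the actual billing rules.

```python
def premium_requests_used(requests: int, multiplier: float) -> float:
    """Premium requests consumed when `requests` chat requests go to one model.

    Assumes each request counts as `multiplier` premium requests, as shown
    in the model picker. This is an illustration, not a billing calculator.
    """
    if requests < 0 or multiplier < 0:
        raise ValueError("requests and multiplier must be non-negative")
    return requests * multiplier


def session_total(usage: list[tuple[int, float]]) -> float:
    """Sum premium requests over (request_count, multiplier) pairs."""
    return sum(premium_requests_used(n, m) for n, m in usage)


if __name__ == "__main__":
    # Hypothetical multipliers for illustration only.
    print(session_total([(10, 1.0), (2, 10.0), (20, 0.0)]))
```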

Customize the model picker

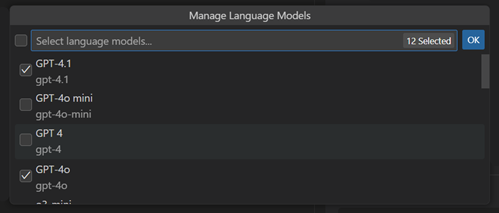

You can customize which models you want to show in the model picker:

- Open the model picker and select Manage Models.

- In the provider list, select Copilot.

- Select the models you want to show in the model picker.

Change the model for code completions

To change the language model that is used for generating code completions in the editor:

- Select Configure Code Completions... from the Copilot menu in the VS Code title bar.

- Select Change Completions Model..., and then select one of the models from the list.

Bring your own language model key

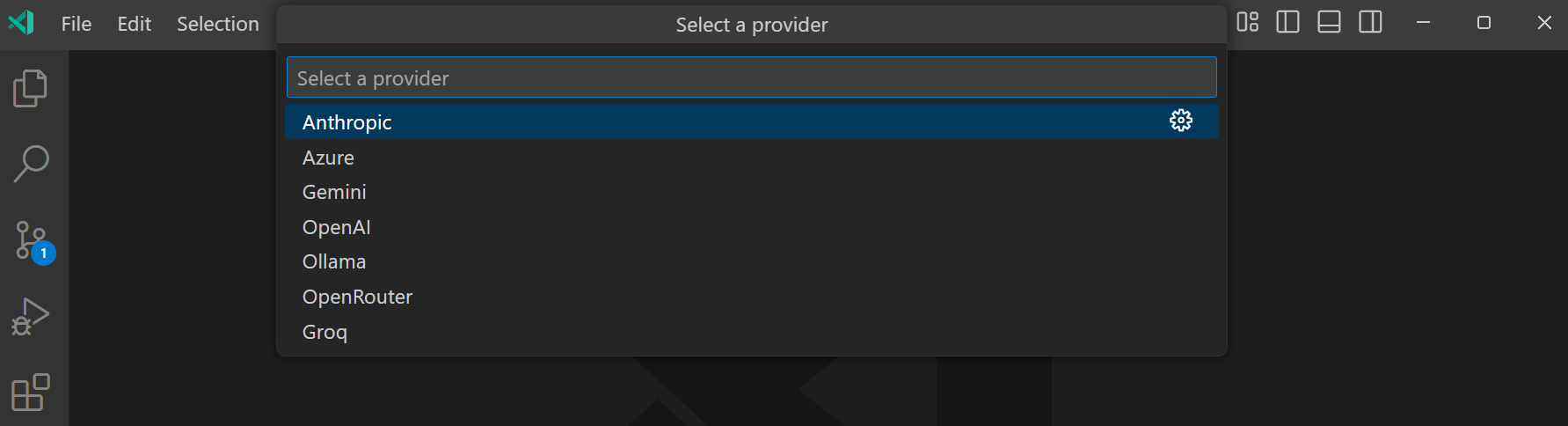

If you already have an API key for a language model provider, you can use their models in chat in VS Code, in addition to the built-in models that Copilot provides. You can use models from the following providers: Anthropic, Azure, Google Gemini, Groq, Ollama, OpenAI, and OpenRouter.

This feature is in preview and is not currently available to Copilot Business or Copilot Enterprise users.

To manage the available models for chat:

-

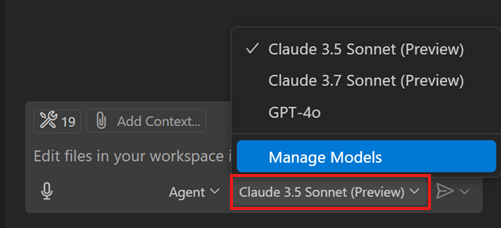

Select Manage Models from the language model picker in the Chat view.

Alternatively, run the GitHub Copilot: Manage Models command from the Command Palette.

-

Select a model provider from the list.

-

Enter the provider-specific details, such as the API key or endpoint URL.

-

Enter the model details or select a model from the list, if available for the provider.

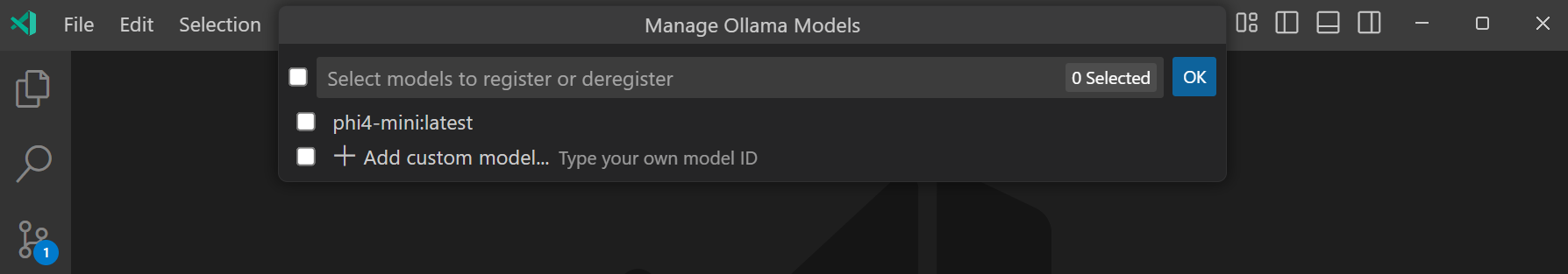

The following screenshot shows the model picker for Ollama running locally, with the Phi-4 model deployed.

-

You can now select the model from the model picker in the Chat view and use it for chat conversations.
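For a local Ollama setup like the one in the screenshot, you can confirm that the server is reachable and the model is installed before you enter the endpoint URL. This Python sketch queries Ollama's `/api/tags` endpoint, which lists locally installed models. It assumes the default address `http://localhost:11434` and the Ollama model name `phi4`, so adjust both to match your installation. VS Code does not require or run this check.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_response: dict) -> list[str]:
    """Extract model names (for example 'phi4:latest') from an /api/tags response."""
    return [m.get("name", "") for m in tags_response.get("models", [])]


def has_model(tags_response: dict, base_name: str) -> bool:
    """True if an installed model matches base_name, ignoring any ':tag' suffix."""
    return any(name.split(":", 1)[0] == base_name for name in model_names(tags_response))


def fetch_tags(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    """Ask the local Ollama server which models are installed."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        tags = fetch_tags()
    except (OSError, ValueError) as err:  # connection failures or a non-JSON reply
        print(f"Could not query Ollama at {OLLAMA_URL}: {err}")
    else:
        print("phi4 installed:", has_model(tags, "phi4"))
```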

Update the provider details

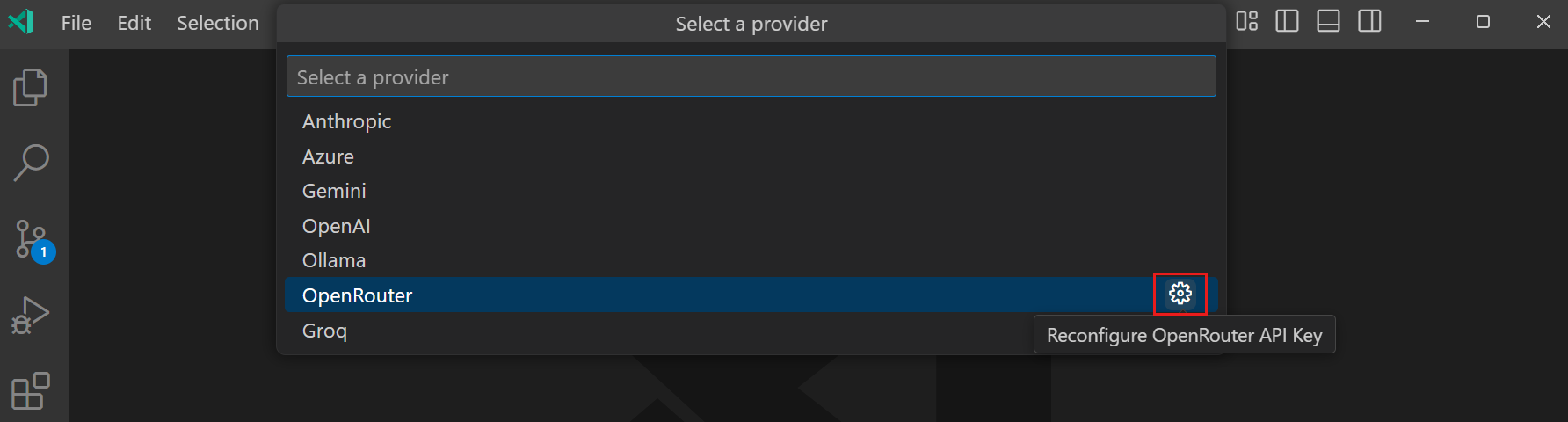

To update the provider details, such as the API key or endpoint URL:

- Select Manage Models from the language model picker in the Chat view.

  Alternatively, run the GitHub Copilot: Manage Models command from the Command Palette.

- Hover over a model provider in the list, and select the gear icon to edit the provider details.

- Update the provider details, such as the API key or endpoint URL.
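Before you paste a replacement key, you can check that the provider accepts it. This Python sketch uses OpenAI as the example provider and sends a bearer-authenticated `GET` to its `https://api.openai.com/v1/models` endpoint. A `200` response means the key was accepted, and a `401` means it was rejected. Other providers use different endpoints and authentication schemes. The `OPENAI_API_KEY` environment variable is only a convenient place to hold the key for this check. VS Code does not read it.

```python
import os
import urllib.error
import urllib.request

MODELS_URL = "https://api.openai.com/v1/models"


def build_models_request(api_key: str) -> urllib.request.Request:
    """Build a GET request to OpenAI's model-list endpoint with bearer auth."""
    key = api_key.strip()
    if not key:
        raise ValueError("API key is empty")
    return urllib.request.Request(
        MODELS_URL,
        headers={"Authorization": f"Bearer {key}"},
        method="GET",
    )


def key_is_valid(api_key: str, timeout: float = 10.0) -> bool:
    """Return True on HTTP 200 and False on 401 (rejected key); re-raise other errors."""
    try:
        with urllib.request.urlopen(build_models_request(api_key), timeout=timeout) as resp:
            return resp.status == 200
    except urllib.error.HTTPError as err:
        if err.code == 401:
            return False
        raise


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY", "")
    if not key:
        print("Set OPENAI_API_KEY to check a key")
    else:
        try:
            print("key accepted:", key_is_valid(key))
        except OSError as err:  # network errors and non-401 HTTP errors
            print("check failed:", err)
```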

Considerations

Keep the following considerations in mind when you use your own language model API key in VS Code:

- Bringing your own model only applies to the chat experience and doesn't impact code completions or other AI-powered features in VS Code, such as commit-message generation.

- The capabilities of each model might differ from the built-in models and could affect the chat experience. For example, some models might not support vision or tool calling.

- The Copilot API is still used for some tasks, such as sending embeddings, repository indexing, query refinement, intent detection, and side queries.

- When using your own model, there is no guarantee that responsible AI filtering is applied to the model's output.

Frequently asked questions

Why is bring your own model key not available for Copilot Business or Copilot Enterprise?

Bringing your own model key is not available for Copilot Business or Copilot Enterprise because it is mainly intended to let users experiment with the newest models as soon as they are announced, before they are available as built-in models in Copilot.

Bringing your own model key will come to Copilot Business and Enterprise plans later this year, as we better understand the requirements that organizations have for using this functionality at scale. Copilot Business and Enterprise users can still use the built-in, managed models.